This article was originally published on Fool.com. All figures quoted in US dollars unless otherwise stated.

Nvidia (NASDAQ: NVDA) had a market capitalisation of $360 billion at the start of 2023. Less than two years later, it's now worth over $3.4 trillion. Although the company supplies graphics processing units (GPUs) for personal computers and even cars, the data center segment has been the primary source of its growth over that period.

Nvidia's data center GPUs are the most powerful in the industry for developing and deploying artificial intelligence (AI) models. The company is struggling to keep up with demand from AI start-ups and the world's largest technology giants. While that's a great thing, there is a potential risk beneath the surface.

Nvidia's financial results for its fiscal 2025 second quarter (which ended on July 28) showed that the company increasingly relies on a handful of customers to generate sales. Here's why that could lead to vulnerabilities in the future.

GPU ownership is a rich company's game

According to a study by McKinsey and Company, 72% of organisations worldwide are using AI in at least one business function. That number continues to grow, but most companies don't have the financial resources (or the expertise) to build their own AI infrastructure. After all, one of Nvidia's leading GPUs can cost up to $40,000, and it often takes thousands of them to train an AI model.

Instead, tech giants like Microsoft, Amazon, and Alphabet buy hundreds of thousands of GPUs and cluster them inside centralised data centers. Businesses can rent that computing capacity to deploy AI into their operations for a fraction of the cost of building their own infrastructure.

Cloud companies like DigitalOcean are now making AI accessible to even the smallest businesses using that same strategy. DigitalOcean allows developers to access clusters of between one and eight Nvidia H100 GPUs, enough for very basic AI workloads.

Affordability is improving. Nvidia's new Blackwell-based GB200 GPU systems are capable of performing AI inference at 30 times the pace of the older H100 systems. Each individual GB200 GPU is expected to sell for between $30,000 and $40,000, which is roughly the same price as the H100 when it was first released, so Blackwell offers an incredible improvement in cost efficiency.
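As a rough back-of-the-envelope check on that claim, the sketch below compares the price paid per unit of inference throughput. It rests on two simplifying assumptions that are not Nvidia disclosures: H100 throughput is normalised to 1.0, and the 30x speed-up quoted for GB200 systems is treated as if it applied to each GPU at the quoted price.

```python
# Back-of-the-envelope cost-efficiency comparison.
# Assumptions (illustrative only): H100 throughput is normalised to 1.0, and
# the 30x inference speed-up quoted for GB200 systems carries over per GPU.

def price_per_unit_throughput(price_usd: float, relative_throughput: float) -> float:
    """Dollars paid per unit of normalised inference throughput."""
    return price_usd / relative_throughput

PRICE_RANGE_USD = (30_000, 40_000)  # quoted launch price range for both chips
H100_THROUGHPUT = 1.0               # baseline (assumed normalisation)
GB200_THROUGHPUT = 30.0             # 30 times the H100 pace, per the article

for price in PRICE_RANGE_USD:
    h100_cost = price_per_unit_throughput(price, H100_THROUGHPUT)
    gb200_cost = price_per_unit_throughput(price, GB200_THROUGHPUT)
    print(f"At ${price:,}: H100 ${h100_cost:,.0f} vs GB200 ${gb200_cost:,.0f} per unit")
```

Under those assumptions, the same dollar buys roughly 30 times as much inference capacity, which is the sense in which cost efficiency improves even though the sticker price does not fall.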

That means the most advanced, trillion-parameter large language models (LLMs), which have previously only been developed by well-resourced tech giants and leading AI start-ups like OpenAI and Anthropic, will be financially accessible to a broader range of developers. Still, it could be years before GPU prices fall enough that the average business can maintain its own AI infrastructure.

The risk for Nvidia

Since only a small number of tech giants and top AI start-ups are buying the majority of AI GPUs, Nvidia's sales are extremely concentrated at the moment.

In the fiscal 2025 second quarter, the company generated $30 billion in total revenue, which was up 122% from the year-ago period. The data center segment was responsible for $26.3 billion of that revenue, and that number grew by a whopping 154%.
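To put those growth rates in context, the short sketch below backs out the implied year-ago figures and the data center segment's share of total revenue. It uses only the rounded numbers quoted above, so the results are approximations rather than reported figures; the function name is just illustrative.

```python
# Derive implied year-ago revenue and segment share from the quoted figures.
# Inputs are the rounded numbers in the article, so outputs are approximate.

def implied_prior(current_bn: float, growth_pct: float) -> float:
    """Back out the year-ago figure from the current figure and its growth rate."""
    return current_bn / (1 + growth_pct / 100)

TOTAL_REVENUE_BN = 30.0   # fiscal 2025 Q2 total revenue
TOTAL_GROWTH_PCT = 122    # year-over-year growth
DATA_CENTER_BN = 26.3     # fiscal 2025 Q2 data center revenue
DATA_CENTER_GROWTH_PCT = 154

total_prior = implied_prior(TOTAL_REVENUE_BN, TOTAL_GROWTH_PCT)
dc_prior = implied_prior(DATA_CENTER_BN, DATA_CENTER_GROWTH_PCT)

dc_share_now = DATA_CENTER_BN / TOTAL_REVENUE_BN
dc_share_prior = dc_prior / total_prior

print(f"Implied year-ago total revenue: ${total_prior:.1f}B")
print(f"Implied year-ago data center revenue: ${dc_prior:.1f}B")
print(f"Data center share: {dc_share_prior:.0%} a year ago, {dc_share_now:.0%} now")
```

On these numbers, the implied year-ago figures are roughly $13.5 billion in total and $10.4 billion for the data center, so the segment's share of sales rose from about 77% to about 88%.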

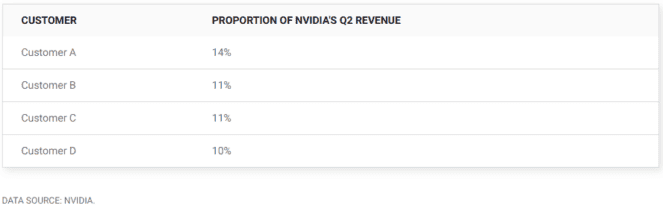

According to Nvidia's 10-Q filing for the second quarter, four customers (who were not identified) accounted for almost half of its $30 billion in revenue.

Nvidia only singles out the customers who account for 10% or more of its revenue, so it's possible there were other material buyers of its GPUs that didn't meet the reporting threshold.

Customers A and B accounted for a combined 25% of the company's revenue during Q2, which ticked higher from 24% in the fiscal 2025 first quarter just three months earlier. In other words, Nvidia's revenue is becoming more — not less — concentrated.

Here's why that could be a problem. Customer A spent $7.8 billion with Nvidia in the last two quarters alone, and only a tiny number of companies in the entire world can sustain that kind of spending on chips and infrastructure. That means if even one or two of Nvidia's top customers cut back on their spending, the company could suffer a loss in revenue that can't be fully replaced.
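A simple way to size that exposure is to multiply revenue by a customer's share and by the size of a spending cut. The sketch below does this for Customers A and B combined, using the 25% share and $30 billion in quarterly revenue quoted above. The cut sizes are purely hypothetical scenarios, not forecasts, and the calculation assumes no other buyer picks up the slack.

```python
# Hypothetical sensitivity of quarterly revenue to cuts by top customers.
# The 25% share and $30B revenue come from the article; the cut sizes are
# illustrative assumptions, and no replacement demand is modelled.

Q2_REVENUE_BN = 30.0
COMBINED_SHARE_A_B = 0.25  # Customers A and B, fiscal 2025 Q2

def revenue_at_risk(revenue_bn: float, customer_share: float, spending_cut: float) -> float:
    """Revenue lost if customers with the given share cut spending by spending_cut."""
    return revenue_bn * customer_share * spending_cut

combined_spend_bn = Q2_REVENUE_BN * COMBINED_SHARE_A_B
print(f"Customers A and B combined: about ${combined_spend_bn:.1f}B in the quarter")

for cut in (0.10, 0.25, 0.50):  # hypothetical scenarios
    lost_bn = revenue_at_risk(Q2_REVENUE_BN, COMBINED_SHARE_A_B, cut)
    print(f"{cut:.0%} cut -> ${lost_bn:.2f}B lost ({lost_bn / Q2_REVENUE_BN:.1%} of revenue)")
```

Customers A and B together represent about $7.5 billion of the quarter's sales, so even a 10% pullback by both would remove roughly $0.75 billion in a single quarter.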

Nvidia's mystery customers

Microsoft is a regular buyer of Nvidia's GPUs, but a recent report from one Wall Street analyst suggests the tech giant is the biggest customer of Blackwell hardware (which starts shipping at the end of this year) so far. As a result, I think Microsoft is Customer A.

Nvidia's other top customers could be some combination of Amazon, Alphabet, Meta Platforms, Oracle, Tesla, and OpenAI. According to public filings, here's how much money some of those companies are spending on AI infrastructure:

- Microsoft allocated $55.7 billion to capital expenditures (capex) during fiscal 2024 (which ended June 30), and most of that went toward GPUs and building data centers. It plans to spend even more in fiscal 2025.

- Amazon's capex is on track to come in at over $60 billion during calendar 2024, which will support the growth it's seeing in AI.

- Meta Platforms plans to spend up to $40 billion on AI infrastructure in 2024 and even more in 2025, in order to build more advanced versions of its Llama AI models.

- Alphabet is on track to allocate around $50 billion to capex this year.

- Oracle allocated $6.9 billion toward AI capex in its fiscal 2024 year (which ended May 31), and it plans to spend double that in fiscal 2025.

- Tesla just told investors its total expenditures on AI infrastructure will top $11 billion this year, as it brings 50,000 Nvidia GPUs online to improve its self-driving software.
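For a sense of scale, the figures in the list above can be added up, as in the rough sketch below. Treat the total with caution: the amounts are approximate ("over", "up to", "around"), they cover different fiscal and calendar periods, and only part of each budget goes to Nvidia hardware.

```python
# Rough tally of the capex figures listed above, in billions of US dollars.
# Caveats: values are approximate, cover different reporting periods, and
# include spending that does not go to Nvidia.

CAPEX_BN = {
    "Microsoft (fiscal 2024)": 55.7,
    "Amazon (calendar 2024, 'over')": 60.0,
    "Meta Platforms (2024, 'up to')": 40.0,
    "Alphabet (this year, 'around')": 50.0,
    "Oracle (fiscal 2024)": 6.9,
    "Tesla (this year, 'top')": 11.0,
}

total_bn = sum(CAPEX_BN.values())

for company, amount in CAPEX_BN.items():
    print(f"{company}: ${amount:.1f}B ({amount / total_bn:.0%} of the tally)")
print(f"Combined: about ${total_bn:.1f}B")
```

The six companies together account for roughly $224 billion in stated or planned spending, which helps explain why a few buyers dominate Nvidia's order book.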

Based on that information, Nvidia's revenue pipeline looks robust for at least the next year. The picture is less clear further into the future because we don't know how long those companies can keep up that level of spending.

Nvidia CEO Jensen Huang thinks data center operators will spend $1 trillion building AI infrastructure over the next five years. If he's right, the company could continue growing well into the late 2020s. But there is competition coming online that could steal some market share.

Advanced Micro Devices released its own AI data center GPUs last year, and it plans to launch a new chip architecture to compete with Blackwell in the second half of 2025. Plus, Microsoft, Amazon, and Alphabet have designed their own data center chips, and although it could take time to erode Nvidia's technological advantage, that hardware will eventually be more cost-effective for them to use.

None of this is an immediate cause for concern for Nvidia's investors, but they should keep an eye on the company's revenue concentration in the upcoming quarters. If it continues to rise, that might create a higher risk of a steep decline in sales at some point in the future.
